Motivation

A widely used metric for measuring the performance of a render engine is frames per second (fps). In this tutorial I will show you how to implement a class that performs this measurement.

FrameCounter Class

Source Code

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Runtime.InteropServices;

namespace Apparat
{
    public class FrameCounter
    {
        // Native Win32 functions for the high-resolution performance counter.
        [DllImport("Kernel32.dll")]
        private static extern bool QueryPerformanceCounter(
            out long lpPerformanceCount);

        [DllImport("Kernel32.dll")]
        private static extern bool QueryPerformanceFrequency(
            out long lpFrequency);

        #region Singleton Pattern

        private static FrameCounter instance = null;

        public static FrameCounter Instance
        {
            get
            {
                if (instance == null)
                {
                    instance = new FrameCounter();
                }
                return instance;
            }
        }

        #endregion

        #region Constructor

        private FrameCounter()
        {
            // The counter frequency does not change, so the conversion factor is computed once.
            msPerTick = (float)MillisecondsPerTick;

            // Seed the counter so that the first call to Count() measures a real frame
            // instead of the whole time since the counter started.
            now = Counter;
        }

        #endregion

        float msPerTick = 0.0f;

        long frequency;

        // Ticks per second of the performance counter.
        public long Frequency
        {
            get
            {
                QueryPerformanceFrequency(out frequency);
                return frequency;
            }
        }

        long counter;

        // Current tick count of the performance counter.
        public long Counter
        {
            get
            {
                QueryPerformanceCounter(out counter);
                return counter;
            }
        }

        // Duration of a single tick in milliseconds.
        public double MillisecondsPerTick
        {
            get
            {
                return (1000L) / (double)Frequency;
            }
        }

        public delegate void FPSCalculatedHandler(string fps);

        public event FPSCalculatedHandler FPSCalculatedEvent;

        long now;
        long last;
        long dc;
        float dt;
        float elapsedMilliseconds = 0.0f;
        int numFrames = 0;
        float msToTrigger = 1000.0f;

        // Call once per frame. Returns the time since the previous call in milliseconds
        // and raises FPSCalculatedEvent roughly once every msToTrigger milliseconds.
        public float Count()
        {
            last = now;
            now = Counter;
            dc = now - last;        // ticks since the previous call
            numFrames++;
            dt = dc * msPerTick;    // ticks -> milliseconds
            elapsedMilliseconds += dt;

            if (elapsedMilliseconds > msToTrigger)
            {
                float seconds = elapsedMilliseconds / 1000.0f;
                float fps = numFrames / seconds;
                if (FPSCalculatedEvent != null)
                    FPSCalculatedEvent("fps: " + fps.ToString("0.00"));
                elapsedMilliseconds = 0.0f;
                numFrames = 0;
            }

            return dt;
        }
    }
}

QueryPerformanceFrequency and QueryPerformanceCounter

To measure the time spent in a render cycle, I use the two native Win32 functions QueryPerformanceFrequency and QueryPerformanceCounter from Kernel32.dll. QueryPerformanceFrequency returns how many ticks the system's high-resolution performance counter makes per second. You only have to determine this frequency once, because it is fixed at system boot and does not change while the system is running. QueryPerformanceCounter returns the current tick count of the counter. To measure the number of ticks during a certain time span, you read the counter at the beginning and at the end of the span and calculate the difference between the two values.

Because you know how many ticks the counter makes per second, you can convert this difference into time: the elapsed time in milliseconds is the tick difference multiplied by 1000 and divided by the frequency.
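As a worked example of this conversion, the sketch below turns a tick difference into milliseconds with ms = ticks × 1000 / frequency. Instead of the P/Invoke declarations it uses System.Diagnostics.Stopwatch.GetTimestamp and Stopwatch.Frequency, which on Windows are backed by the same high-resolution performance counter when the hardware provides one; treat it as a managed stand-in, not as part of the tutorial's code. The class name TickMath is hypothetical.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper, not part of the tutorial's FrameCounter class.
public static class TickMath
{
    // Converts a tick difference into milliseconds:
    // ms = ticks * 1000 / frequency, where frequency is in ticks per second.
    public static double TicksToMilliseconds(long ticks, long frequency)
    {
        if (frequency <= 0)
            throw new ArgumentOutOfRangeException(nameof(frequency));
        return ticks * 1000.0 / frequency;
    }

    // Reads the counter before and after the action and converts the difference.
    public static double MeasureMilliseconds(Action action)
    {
        long start = Stopwatch.GetTimestamp();
        action();
        long end = Stopwatch.GetTimestamp();
        return TicksToMilliseconds(end - start, Stopwatch.Frequency);
    }
}
```

For example, 3,000,000 ticks on a counter running at 10,000,000 ticks per second (an illustrative frequency, not necessarily the one on your machine) correspond to 300 ms.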

Count Function

Let's have a look at the Count function in detail:

long now;
long last;
long dc;
float dt;
float elapsedMilliseconds = 0.0f;
int numFrames = 0;
float msToTrigger = 1000.0f;

public float Count()
{
    last = now;
    now = Counter;
    dc = now - last;
    numFrames++;
    dt = dc * msPerTick;
    elapsedMilliseconds += dt;

    if (elapsedMilliseconds > msToTrigger)
    {
        float seconds = elapsedMilliseconds / 1000.0f;
        float fps = numFrames / seconds;
        if (FPSCalculatedEvent != null)
            FPSCalculatedEvent("fps: " + fps.ToString("0.00"));
        elapsedMilliseconds = 0.0f;
        numFrames = 0;
    }

    return dt;
}

Every time this function gets called, I store the previous counter value in the variable last and then get the current value of the counter through the Counter property. The difference dc of the two counter values is the number of ticks elapsed between two calls of this function. Because msPerTick holds the duration of one tick in milliseconds, multiplying dc by msPerTick gives the time span dt in milliseconds between two calls of this function.
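One detail worth checking in this scheme is the very first call: unless now is seeded with a counter value before Count runs for the first time, last is 0 and the first dc spans the whole counter value, so the first dt is far too large. The sketch below shows a frame-delta timer that only records a baseline on its first call and returns 0 for it. DeltaTimer is a hypothetical class built on Stopwatch rather than the tutorial's P/Invoke code.

```csharp
using System.Diagnostics;

// Hypothetical frame-delta timer, shown only to illustrate the first-call guard.
public sealed class DeltaTimer
{
    private readonly double msPerTick = 1000.0 / Stopwatch.Frequency;
    private long last;
    private bool hasBaseline;

    // Returns the milliseconds elapsed since the previous call.
    // The first call only records a baseline and returns 0.
    public double Tick()
    {
        long now = Stopwatch.GetTimestamp();
        if (!hasBaseline)
        {
            last = now;
            hasBaseline = true;
            return 0.0;
        }
        long dc = now - last;   // ticks since the previous call
        last = now;
        return dc * msPerTick;  // ticks -> milliseconds
    }
}
```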

The time span dt is added to the variable elapsedMilliseconds, and the variable numFrames is incremented with every call to the Count function. Once elapsedMilliseconds is greater than the predefined time span msToTrigger (1000 ms), I calculate the frames per second fps by dividing numFrames by the elapsed time in seconds, fire the event FPSCalculatedEvent, and reset both variables to zero.
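The averaging step does not depend on where dt comes from, so it can be pulled out and tested with fixed frame times. The sketch below is a hypothetical FpsAccumulator (not part of the tutorial's code) that applies the same rule as Count: accumulate dt and a frame count, and once more than msToTrigger milliseconds have passed, report the number of frames divided by the elapsed seconds and reset.

```csharp
// Hypothetical, clock-independent version of the averaging logic in Count().
public sealed class FpsAccumulator
{
    private readonly double msToTrigger;
    private double elapsedMilliseconds;
    private int numFrames;

    public FpsAccumulator(double msToTrigger = 1000.0)
    {
        this.msToTrigger = msToTrigger;
    }

    // Adds one frame that took dtMs milliseconds. Returns true and sets fps
    // once more than msToTrigger milliseconds have been accumulated.
    public bool AddFrame(double dtMs, out double fps)
    {
        numFrames++;
        elapsedMilliseconds += dtMs;
        fps = 0.0;
        if (elapsedMilliseconds <= msToTrigger)
            return false;

        fps = numFrames / (elapsedMilliseconds / 1000.0);
        elapsedMilliseconds = 0.0;
        numFrames = 0;
        return true;
    }
}
```

With 20 ms per frame, the first report arrives on the 51st frame, when 1020 ms have accumulated, and gives 51 / 1.02 = 50 fps.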

I call the Count function in every render cycle in the RenderManager:

FrameCounter fc = FrameCounter.Instance;

public void renderScene()
{
    while (true)
    {
        fc.Count();

        DeviceManager dm = DeviceManager.Instance;
        dm.context.ClearRenderTargetView(dm.renderTarget, new Color4(0.75f, 0.75f, 0.75f));

        Scene.Instance.render();

        dm.swapChain.Present(syncInterval, PresentFlags.None);
    }
}

FPSCalculatedEvent

I defined a delegate and an event in the FrameCounter class:

public delegate void FPSCalculatedHandler(string fps);
public event FPSCalculatedHandler FPSCalculatedEvent;

The event is fired in the Count function once the frames per second have been calculated. I'll come back to this delegate and event when it comes to displaying the fps on the RenderControl.
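Two small refinements are common when raising an event like this from a render thread. First, copy the event to a local variable before the null check, so that a handler unsubscribed on another thread between the check and the call cannot cause a NullReferenceException. Second, format the number with an explicit culture, because ToString("0.00") otherwise uses the current culture's decimal separator (a comma in German locales, for example). The sketch below shows both; the FpsReporter class and its Report method are hypothetical.

```csharp
using System.Globalization;

// Hypothetical publisher mirroring FrameCounter's delegate/event pair.
public sealed class FpsReporter
{
    public delegate void FPSCalculatedHandler(string fps);
    public event FPSCalculatedHandler FPSCalculatedEvent;

    public void Report(float fps)
    {
        // Copy to a local so the null check and the call see the same delegate.
        FPSCalculatedHandler handler = FPSCalculatedEvent;
        if (handler != null)
        {
            // InvariantCulture always uses '.' as the decimal separator.
            handler("fps: " + fps.ToString("0.00", CultureInfo.InvariantCulture));
        }
    }
}
```

On C# 6 and later, FPSCalculatedEvent?.Invoke(...) expresses the same local-copy pattern in one line.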

SyncInterval

Let's take a look at the render loop again:

public void renderScene()
{
    while (true)
    {
        fc.Count();

        DeviceManager dm = DeviceManager.Instance;
        dm.context.ClearRenderTargetView(dm.renderTarget, new Color4(0.75f, 0.75f, 0.75f));

        Scene.Instance.render();

        dm.swapChain.Present(syncInterval, PresentFlags.None);
    }
}

I pass the variable syncInterval to the Present method of the swap chain.

The value of syncInterval determines how presentation is synchronized with the vertical blank.

If syncInterval is 0, the frame is presented immediately without synchronization; if syncInterval is 1, 2, 3 or 4, presentation is synchronized after the nth vertical blank (MSDN docs).
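As a rough rule of thumb, with syncInterval = n (1 to 4) the swap chain presents at most one frame every n vertical blanks, so on a 60 Hz display the frame rate is limited to about 60, 30, 20 or 15 fps; with 0 there is no such limit. The hypothetical helper below only encodes that arithmetic. The measured rate can be lower when a frame takes longer to render than the sync interval allows.

```csharp
using System;

// Hypothetical helper: upper bound on the presented frame rate for a given
// refresh rate and Present sync interval (0 = no vertical-blank limit).
public static class SyncMath
{
    public static double MaxFps(double refreshRateHz, int syncInterval)
    {
        if (syncInterval < 0 || syncInterval > 4)
            throw new ArgumentOutOfRangeException(nameof(syncInterval), "Valid values are 0 to 4.");
        if (syncInterval == 0)
            return double.PositiveInfinity; // limited only by how fast frames are rendered
        return refreshRateHz / syncInterval;
    }
}
```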

I also implemented a method in the RenderManager that lets other classes switch syncInterval:

int syncInterval = 1;

public void SwitchSyncInterval()
{
    if (syncInterval == 0)
    {
        syncInterval = 1;
    }
    else if (syncInterval == 1)
    {
        syncInterval = 0;
    }
}

The RenderControl calls SwitchSyncInterval, so you can switch syncInterval with the F2 key:

private void RenderControl_KeyUp(object sender, KeyEventArgs e)
{
    if (e.KeyCode == Keys.F1)
    {
        CameraManager.Instance.CycleCameras();
    }
    else if (e.KeyCode == Keys.F2)
    {
        RenderManager.Instance.SwitchSyncInterval();
    }

    CameraManager.Instance.currentCamera.KeyUp(sender, e);
}
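SwitchSyncInterval only toggles between 0 and 1. If you would rather have F2 step through every value Present accepts (0 to 4), a cycling variant needs a single modulo. The sketch below is hypothetical and not part of the tutorial's RenderManager; the SyncIntervalSwitch class stands in for the syncInterval field.

```csharp
// Hypothetical stand-in for RenderManager's sync-interval handling.
public sealed class SyncIntervalSwitch
{
    // Present accepts sync intervals from 0 to 4.
    private const int MaxSyncInterval = 4;

    public int SyncInterval { get; private set; } = 1;

    // Steps 1 -> 2 -> 3 -> 4 -> 0 -> 1 ...
    public void Cycle()
    {
        SyncInterval = (SyncInterval + 1) % (MaxSyncInterval + 1);
    }
}
```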

Displaying Frames per Second

To display text, I added a label control called DebugTextLabel on top of the RenderControl. Rendering text seems to be a bit more complicated with DirectX 11 than it was with DirectX 9 (if you know a good reference for rendering text in DirectX 11, please leave a comment). I will use this interim solution for displaying text until I have written a parser for TrueType fonts ;)

The delegate and event for sending the calculated frames per second are defined in the FrameCounter class (see above), and the event is fired when the frames per second have been calculated.

The method Instance_FPSCalculatedEvent in the class RenderControl handles the FPSCalculatedEvent and is registered in the constructor of the RenderControl:

public RenderControl()
{
    InitializeComponent();
    this.MouseWheel += new MouseEventHandler(RenderControl_MouseWheel);
    FrameCounter.Instance.FPSCalculatedEvent += new FrameCounter.FPSCalculatedHandler(Instance_FPSCalculatedEvent);
}

This is the code for the handler Instance_FPSCalculatedEvent in the RenderControl:

delegate void setFPS(string fps);

void Instance_FPSCalculatedEvent(string fps)
{
    if (this.InvokeRequired)
    {
        setFPS d = new setFPS(Instance_FPSCalculatedEvent);
        this.Invoke(d, new object[] { fps });
    }
    else
    {
        this.DebugTextLabel.Text = fps;
    }
}

The label text is set to the string fps that the event passes as an argument. The render loop runs on a different thread than the one that created DebugTextLabel, and Windows Forms controls may only be accessed from the thread that created them. The handler therefore checks the InvokeRequired property of the RenderControl: if it is true, Invoke marshals the call to the UI thread, where the same handler runs again and sets the label.

Results

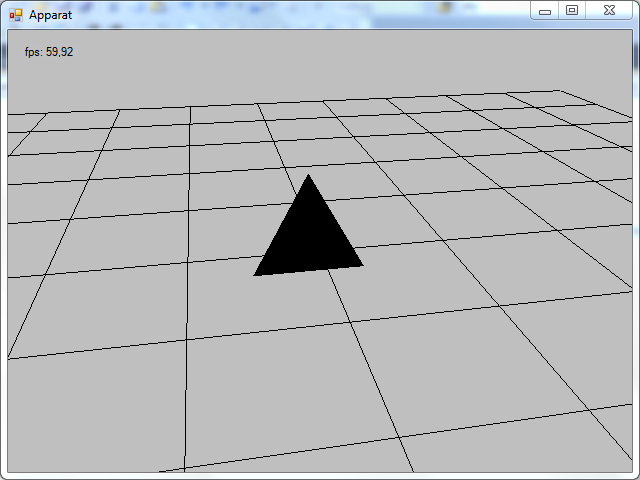

Now we can display the current frame rate of the render engine:

~60 frames per second with SyncInterval = 1

Several thousand frames per second with SyncInterval = 0

To experiment a bit, insert a Thread.Sleep(ms) statement into the render loop of the renderScene method in the RenderManager class and observe how the frame rate changes for different values of ms, both with syncInterval = 1 and with syncInterval = 0. Also try setting syncInterval to 2, 3 or 4 and observe the effect on the frames per second.
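If you want to try the Thread.Sleep experiment without the DirectX setup, the console sketch below stands in for renderScene: each iteration sleeps for a fixed number of milliseconds to simulate work, and the loop counts frames over a fixed duration using Stopwatch. SleepExperiment and MeasureFps are hypothetical names. Expect the result to stay below 1000 / sleepMs, and noticeably below it on systems whose timer resolution rounds the sleep up.

```csharp
using System.Diagnostics;
using System.Threading;

// Hypothetical stand-in for the render loop, used to observe how a fixed
// per-frame delay limits the frame rate.
public static class SleepExperiment
{
    public static double MeasureFps(int sleepMs, int durationMs)
    {
        int frames = 0;
        Stopwatch watch = Stopwatch.StartNew();
        while (watch.ElapsedMilliseconds < durationMs)
        {
            Thread.Sleep(sleepMs); // simulated rendering work
            frames++;
        }
        watch.Stop();
        return frames / watch.Elapsed.TotalSeconds;
    }
}
```

For example, MeasureFps(20, 500) should report at most about 50 fps.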

The source code to this tutorial is here.

Have fun!
